Generative AI and Information Governance: A Practitioner's Guide

The information governance landscape is undergoing its most significant transformation since the advent of cloud computing. Generative AI—the same technology powering tools like ChatGPT and Claude—is rapidly moving from novelty to necessity in how organizations manage, classify, and protect their data assets. For those of us who have spent decades wrestling with the challenges of compliance, records retention, and e-discovery, this shift represents both an unprecedented opportunity and a call to fundamentally rethink our assumptions.

Information governance has always been about bridging the gap between what organizations should do with their data and what they actually do. That gap has traditionally been filled by policies, procedures, training, and hope. Generative AI changes the equation entirely.

Where traditional automation could follow rules, generative AI can understand context. It can read a document the way a paralegal does—grasping not just keywords but meaning, relationships, and implications. This capability transforms the core functions of information governance:

Classification and Taxonomy Management. Instead of relying on users to manually tag documents or rigid rule-based systems that miss nuance, generative AI can analyze content semantically. A contract amendment doesn't need to contain the word "amendment" to be recognized as one. The AI understands what makes an amendment an amendment.

Policy Interpretation. Retention schedules and compliance requirements are notoriously difficult to apply consistently across an organization. Generative AI can interpret these policies against actual content, making real-time recommendations that account for the specific context of each document.

Knowledge Discovery. Perhaps most powerfully, generative AI can surface connections and insights that would otherwise remain buried. It can identify that three seemingly unrelated documents from different departments all relate to the same regulatory obligation—a discovery that might take a human analyst weeks of review.

Let me be clear about something: generative AI doesn't replace information governance frameworks—it supercharges them. The fundamentals remain essential. You still need defensible retention policies, clear ownership structures, and robust audit trails. What changes is how effectively you can execute against those requirements.

Consider the typical compliance workflow for a financial services firm. Historically, ensuring regulatory compliance meant sampling: reviewing a statistically valid sample of communications and hoping that sample was representative. Generative AI enables comprehensive review at scale. Every email, every Teams message, every document can be analyzed against compliance requirements in near real-time.

The implications for risk management are profound. Instead of discovering compliance gaps during audits or, worse, during litigation, organizations can identify and remediate issues proactively. The shift from reactive to predictive compliance isn't just more efficient—it's fundamentally less risky.

None of this comes without significant challenges. Integrating generative AI into existing information systems is far more complex than deploying a new application.

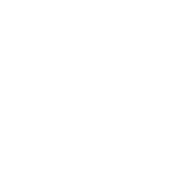

The first hurdle is data architecture. Generative AI needs access to your data to work with it, which immediately raises questions about data residency, access controls, and system integration. Most organizations have information scattered across dozens of repositories—SharePoint, file shares, legacy ECM systems, email archives, collaboration platforms. Creating a coherent integration layer that allows AI to access this distributed landscape while maintaining security boundaries is a non-trivial engineering challenge.

Then there's the question of model selection and deployment. Do you use cloud-based services, accepting that your data will be processed on someone else's infrastructure? Do you deploy on-premises models, accepting the computational overhead and maintenance burden? Do you fine-tune general models on your specific domain, or rely on prompt engineering and Retrieval-Augmented Generation (RAG)? RAG has emerged as particularly valuable for information governance, allowing AI systems to ground their responses in your organization's actual documents and policies rather than relying solely on general training data. Each choice carries tradeoffs in cost, capability, and control.
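To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-ground pattern. It is illustrative only: the policy passages, their ids, and the function names are hypothetical, and the term-overlap scoring is a stand-in for the embedding-based search a production system would more likely use. The point is simply that the prompt sent to the model is assembled from your own policy text.

```python
import re

# Hypothetical policy passages; a real system would index actual documents.
POLICY_PASSAGES = [
    {"id": "RET-001", "text": "Vendor contracts are retained for seven years after expiration."},
    {"id": "RET-002", "text": "Marketing drafts are retained for one year."},
    {"id": "HR-010", "text": "Personnel files are retained for six years after separation."},
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap(query, passage_text):
    """Toy relevance score: number of terms shared by query and passage."""
    return len(tokenize(query) & tokenize(passage_text))


def retrieve(query, passages, k=2):
    """Return up to k passages sharing at least one term, best match first."""
    scored = [(overlap(query, p["text"]), p) for p in passages]
    scored = [(s, p) for s, p in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query, passages):
    """Assemble a prompt that asks the model to answer only from the sources."""
    sources = "\n".join(f"[{p['id']}] {p['text']}" for p in passages)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    question = "How long are vendor contracts retained?"
    print(build_prompt(question, retrieve(question, POLICY_PASSAGES)))
```

Because the sources carry ids, a reviewer can trace any answer back to the policy text it was grounded in, which is much of what makes this pattern attractive for governance work.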

Perhaps the most underestimated challenge is change management. Generative AI doesn't just change tools—it changes workflows. Records managers who have spent their careers developing classification expertise must now learn to supervise and validate AI-generated classifications. Compliance officers must understand enough about model behavior to meaningfully audit AI-driven decisions. This transition requires investment in training and, frankly, a willingness to rethink job roles.

Any discussion of generative AI in information governance must grapple seriously with privacy and security. These aren't features to be added later—they're architectural requirements that must be addressed from the outset.

The training data problem is well-documented. Some early generative AI services used customer inputs to train their models, creating the risk that sensitive organizational data could influence model outputs for other users. While major providers have addressed this concern with enterprise tiers that don't train on customer data, organizations must verify these protections contractually and technically before deployment.

Data residency adds another layer of complexity, particularly for organizations subject to GDPR, CCPA, or sector-specific regulations. If your generative AI service processes European personal data in a US data center without a valid cross-border transfer mechanism in place, you may have a compliance problem regardless of how sophisticated the AI is.

Access control is perhaps the most subtle challenge. If you connect a generative AI system to your document repositories, how do you ensure it respects existing permissions? A powerful AI that can answer any question about your organization's documents is a security liability if it answers those questions without regard to who's asking. Implementing permission-aware AI requires careful integration with identity management systems and ongoing validation that access controls are being honored.
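One way to picture permission-aware AI is as a filter applied to candidate documents before any of them reach the model. The sketch below assumes a simplified, hypothetical permission model in which each document lists the groups allowed to read it; real repositories have richer ACLs, and the names `Document`, `User`, and `permitted_context` are illustrative rather than drawn from any product.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # assumption: group-based read permissions


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset[str]


def can_read(user: User, doc: Document) -> bool:
    """A user may read a document if they share at least one group with it."""
    return bool(user.groups & doc.allowed_groups)


def permitted_context(user: User, candidates: list[Document]) -> list[Document]:
    """Drop every candidate the asking user could not open directly."""
    return [doc for doc in candidates if can_read(user, doc)]


if __name__ == "__main__":
    analyst = User("analyst", frozenset({"finance"}))
    candidates = [
        Document("board-minutes", "Confidential minutes", frozenset({"legal", "executives"})),
        Document("expense-policy", "Expense policy text", frozenset({"finance", "all-staff"})),
    ]
    for doc in permitted_context(analyst, candidates):
        print(doc.doc_id)
```

The design choice worth noting is where the check happens: filtering before the model sees any content is generally safer than trying to redact an answer after it has been generated.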

My recommendation: treat AI integration as you would any other system handling sensitive data. Conduct thorough risk assessments. Engage your security team early. Document your technical and administrative controls. And maintain the ability to audit what data the AI has accessed and what outputs it has generated.
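As a rough illustration of that last point, an audit record for each AI interaction might capture who asked, which documents were accessed, and fingerprints of the prompt and output. The field names below are hypothetical, and storing hashes rather than full text is only one option; some organizations will need to retain the full prompt and response under their own retention rules.

```python
import hashlib
import json
from datetime import datetime, timezone


def sha256_hex(text):
    """Return the SHA-256 fingerprint of a string as hex."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(user, prompt, doc_ids, output):
    """Build one audit entry for a single AI interaction (hypothetical schema)."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "documents_accessed": sorted(doc_ids),
        "prompt_sha256": sha256_hex(prompt),
        "output_sha256": sha256_hex(output),
    }


def to_json_line(record):
    """Serialize a record as one line, suitable for an append-only log file."""
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    record = audit_record(
        user="jdoe",
        prompt="Summarize the vendor retention policy.",
        doc_ids=["RET-002", "RET-001"],
        output="Vendor contracts are kept for seven years.",
    )
    print(to_json_line(record))
```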

For organizations invested in the Microsoft ecosystem, the integration landscape has evolved rapidly. Microsoft 365 Copilot now embeds generative AI directly into the applications where knowledge workers spend their time—Word, Excel, PowerPoint, Outlook, and Teams. For information governance professionals, this means AI is no longer a separate system to integrate; it's already present in the productivity tools your users rely on daily.

Microsoft Purview has similarly evolved, incorporating AI capabilities for data classification, sensitivity labeling, and compliance monitoring. Purview's trainable classifiers can recognize categories of content that pattern-based sensitive information types tend to miss, which allows protection policies to be applied more accurately than with pattern matching alone. The integration between Copilot and Purview creates a governance layer that can track how AI interacts with organizational data, providing the audit trails that compliance requires.

However, this embedded approach also introduces new governance challenges. When AI is woven into every document interaction, organizations must think carefully about data exposure, prompt injection risks, and the potential for AI to surface information that users shouldn't access. The convenience of native integration doesn't eliminate the need for thoughtful governance architecture.

For those of us in the e-discovery trenches, generative AI represents the most significant advancement in decades. The traditional e-discovery workflow—collection, processing, review, analysis, production—is being fundamentally reimagined.

Early Case Assessment. Generative AI can rapidly analyze a document population to provide insights about key themes, custodians, and date ranges. What once required days of manual sampling can now be accomplished in hours, enabling more informed decisions about case strategy and scope.

Document Review. This is where the efficiency gains are most dramatic. Generative AI can prioritize documents for review, suggest coding decisions, and identify conceptually similar documents across the review set. First-pass review that previously required dozens of contract reviewers can now be accomplished by a fraction of that team, with the AI handling routine documents and escalating complex or ambiguous items for human review.

Privilege Analysis. Identifying privileged documents has always been particularly challenging—it requires understanding relationships, context, and legal principles. Generative AI can flag potentially privileged documents with surprising accuracy, dramatically reducing the risk of inadvertent privilege waiver.

Records management sees similar benefits. Automated classification reduces the burden on end users, who have never been reliable sources of accurate metadata. Intelligent retention application ensures records are disposed of appropriately, reducing both storage costs and legal risk. And continuous compliance monitoring catches issues before they become problems.
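The retention step itself is simple arithmetic once a record has been classified. The sketch below assumes a hypothetical schedule that maps a record class to a retention period in years, counted from a trigger date such as contract expiration. The classes and periods are invented for illustration and are not recommendations.

```python
from datetime import date

# Hypothetical retention schedule: record class -> years to retain.
RETENTION_YEARS = {
    "contract": 7,
    "invoice": 6,
    "marketing_draft": 1,
}


def add_years(start, years):
    """Add whole years to a date, mapping Feb 29 to Feb 28 when needed."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        # Raised only when start is Feb 29 and the target year is not a leap year.
        return start.replace(year=start.year + years, day=28)


def disposal_date(record_class, trigger_date):
    """Earliest date on which the record may be disposed of."""
    return add_years(trigger_date, RETENTION_YEARS[record_class])


def eligible_for_disposal(record_class, trigger_date, today):
    """True once the retention period has fully elapsed."""
    return today >= disposal_date(record_class, trigger_date)


if __name__ == "__main__":
    print(disposal_date("contract", date(2020, 2, 29)))
    print(eligible_for_disposal("marketing_draft", date(2023, 1, 15), date(2024, 1, 15)))
```

In this framing the AI's contribution is the classification that selects the record class; the schedule lookup and date calculation stay deterministic and easy to audit.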

The abstract benefits of generative AI become concrete when you examine actual deployments. While many implementations remain confidential, several patterns have emerged from organizations at the forefront of adoption.

Large law firms are deploying AI-powered review platforms that combine generative AI with traditional technology-assisted review. These systems learn from reviewer decisions, progressively improving accuracy while simultaneously reducing the volume requiring human review. Firms report significant review cost reductions on large matters—often in the range of 30-50%—with quality metrics that match or exceed traditional approaches, though results vary considerably based on matter complexity and implementation maturity.

Financial institutions are using generative AI for communications surveillance, moving beyond keyword-based monitoring to semantic analysis that can detect potential compliance violations even when employees carefully avoid obvious trigger words. The same institutions are applying these capabilities to customer correspondence, automatically identifying complaints, regulatory inquiries, and other communications requiring special handling.

Healthcare organizations are leveraging AI for medical records management, automatically identifying and classifying sensitive health information, ensuring appropriate access controls, and facilitating compliance with HIPAA and state privacy requirements.

Government agencies—traditionally conservative technology adopters—are increasingly piloting generative AI for FOIA request processing, records scheduling, and correspondence management.

Predicting technology evolution is a humbling exercise, but certain trajectories seem reasonably clear.

AI will become embedded in the platforms you already run. Your SharePoint implementation, your e-discovery platform, and your ECM system will all incorporate AI capabilities as standard features rather than premium add-ons. The question will shift from "should we use AI?" to "how do we govern the AI that's already present in our systems?"

Multimodal analysis will mature. Today's systems are primarily text-focused, but AI that can analyze images, audio, and video with similar sophistication is advancing rapidly. For information governance, this means extending automated classification and compliance monitoring to the full range of organizational content.

AI will move from recommending to acting. Rather than flagging a retention violation for human remediation, the AI will be able to execute the remediation directly, with appropriate oversight and audit trails. Frameworks like LangGraph, AutoGen, and CrewAI are already enabling developers to build AI agents that can orchestrate complex, multi-step workflows. In the governance context, this means agents that can identify a compliance issue, gather relevant context from multiple systems, draft a remediation plan, execute approved actions, and document the entire process, all while maintaining the audit trails and human oversight checkpoints that compliance requires.
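The essential shape of such a workflow, a proposed action that cannot run until a human approves it and that leaves a trail either way, can be sketched in plain Python. This deliberately does not use the APIs of LangGraph, AutoGen, or CrewAI; `RemediationPlan`, `run_remediation`, and the callbacks are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class RemediationPlan:
    record_id: str
    issue: str
    action: str


def run_remediation(
    plan: RemediationPlan,
    approve: Callable[[RemediationPlan], bool],
    execute: Callable[[RemediationPlan], None],
    audit_log: List[str],
) -> bool:
    """Execute a plan only after approval, logging every step taken."""
    audit_log.append(f"proposed: {plan.action} on {plan.record_id} ({plan.issue})")
    if not approve(plan):
        audit_log.append(f"rejected: {plan.record_id}")
        return False
    audit_log.append(f"approved: {plan.record_id}")
    execute(plan)
    audit_log.append(f"executed: {plan.action} on {plan.record_id}")
    return True


if __name__ == "__main__":
    log: List[str] = []
    disposed: List[str] = []
    plan = RemediationPlan("DOC-42", "retention period exceeded", "dispose")
    # Stand-in for a human reviewer; here the reviewer approves disposals only.
    run_remediation(plan, lambda p: p.action == "dispose",
                    lambda p: disposed.append(p.record_id), log)
    print("\n".join(log))
```

Passing the approval step in as a function keeps the checkpoint explicit: swapping in a review queue or a ticketing integration changes who approves, not whether approval is required.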

Regulation will keep evolving. We're already seeing AI-specific regulations emerge globally. Organizations that build governance frameworks now, with adaptability in mind, will be better positioned to comply with requirements we can't yet fully anticipate.

Several misconceptions about generative AI persist in professional circles, and addressing them directly is worthwhile.

Misconception: generative AI will replace information governance professionals. This fundamentally misunderstands both the technology and the discipline. Generative AI augments human capabilities; it doesn't replace the judgment, accountability, and strategic thinking that effective governance requires. The professionals who thrive will be those who learn to leverage AI as a force multiplier for their expertise.

Misconception: AI is a black box that cannot be audited. While AI explainability remains an active research area, modern enterprise AI systems provide substantial transparency into their decision-making. More importantly, the same sampling and audit techniques we use to validate human decision-making can be applied to AI outputs. The key is building these validation processes into your workflow.
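As a sketch of what that validation can look like, the snippet below sizes a random audit sample using the standard formula for estimating a proportion, with a finite population correction, and then measures how often AI decisions match a human reviewer's. The defaults (95% confidence, 5% margin of error, p = 0.5) are common conventions assumed here for illustration, not requirements from any standard.

```python
import math


def sample_size(population, z=1.96, margin=0.05, p=0.5):
    """Sample size for estimating a proportion, with finite population correction.

    z=1.96 corresponds to roughly 95% confidence; p=0.5 is the most
    conservative assumption about the underlying error rate.
    """
    n0 = (z ** 2) * p * (1 - p) / (margin ** 2)
    corrected = n0 / (1 + (n0 - 1) / population)
    return math.ceil(corrected)


def agreement_rate(ai_labels, human_labels):
    """Fraction of sampled items where the AI and the human reviewer agree."""
    if len(ai_labels) != len(human_labels) or not ai_labels:
        raise ValueError("label lists must be non-empty and the same length")
    matches = sum(a == h for a, h in zip(ai_labels, human_labels))
    return matches / len(ai_labels)


if __name__ == "__main__":
    print(sample_size(10_000))
    print(agreement_rate(["keep", "dispose", "keep", "keep"],
                         ["keep", "dispose", "dispose", "keep"]))
```

For a population of 10,000 AI-classified documents this yields a sample of 370, and the toy label lists agree on three of four items, a rate of 0.75.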

Misconception: AI is too risky for sensitive or regulated data. Privacy and security concerns are legitimate but addressable. Enterprise AI solutions with appropriate data handling, access controls, and contractual protections can be deployed even in highly regulated environments. The question isn't whether to use AI, but how to use it safely.

Misconception: the technology isn't ready yet. The technology is mature enough for production deployment today. Organizations waiting for some future state of perfection are falling behind competitors who are learning, iterating, and building institutional expertise now.

The pace of AI advancement creates a genuine challenge for professionals trying to stay current. A few strategies have proven effective:

Engage with professional organizations. ARMA International, the Sedona Conference, and similar bodies are publishing guidance and hosting discussions specifically focused on AI applications in records management, e-discovery, and compliance.

Keep track of emerging capabilities. Even if you're not ready to deploy, understanding what's possible, and what's coming, helps inform your strategic planning.

Experiment with the tools yourself. The conceptual understanding you gain from using consumer AI applications transfers meaningfully to evaluating enterprise solutions.

The professionals who will lead in this space are those who approach AI with curiosity rather than fear, who are willing to experiment, and who view capability gaps as opportunities for growth rather than threats.

No serious treatment of AI in information governance can ignore the ethical dimensions. These technologies create genuine dilemmas that require thoughtful navigation.

Bias and fairness deserve particular attention. AI systems learn from historical data, which may embed historical biases. If your training data reflects past discriminatory patterns—in hiring, lending, or other decisions documented in your records—your AI may perpetuate those patterns. Active testing and monitoring for bias is essential.

Transparency to affected parties raises important questions. When AI plays a significant role in decisions affecting individuals—employment actions, benefits determinations, legal outcomes—what disclosure is appropriate? Legal requirements vary, but ethical obligations may extend beyond legal minimums.

Accountability becomes more complex when AI is in the loop. If an AI system makes an error with significant consequences, who is responsible? The technology vendor? The organization that deployed it? The individual who relied on its output? Clear accountability frameworks should be established before deployment, not after an incident.

Human dignity warrants consideration as well. The efficiency of AI can be seductive, but we should be thoughtful about which decisions we're comfortable delegating to machines and which require human judgment, regardless of efficiency considerations.

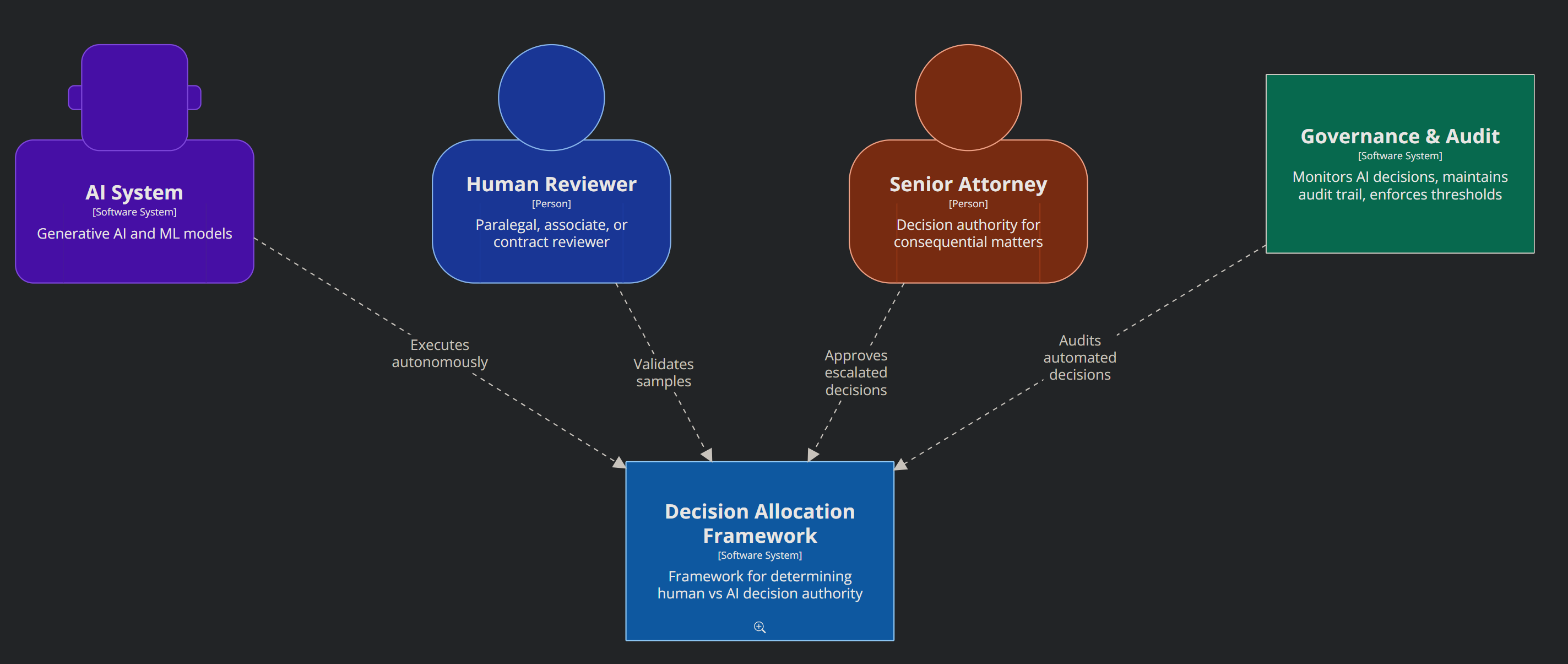

The relationship between human judgment and AI capability is nuanced. Neither "AI decides everything" nor "humans decide everything, AI just helps" captures the right model.

For routine, high-volume decisions with clear criteria—basic document classification, straightforward retention application, initial relevance screening—AI can and should handle the workload with minimal human intervention. The efficiency gains are too significant to ignore, and human attention is better directed elsewhere.

For consequential decisions with significant stakes—privilege determinations, litigation strategy, policy exceptions—human judgment remains essential. AI should inform these decisions, providing analysis and recommendations, but the final call should rest with accountable humans.

For ambiguous cases in between, a collaborative model works best. AI handles initial analysis and flags items requiring human attention. Humans review, decide, and provide feedback that improves future AI performance. Over time, the boundary between "AI handles" and "human reviews" can shift based on demonstrated AI reliability.
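The three tiers described above can be expressed as a small routing rule. In this sketch the decision-type names and the 0.95 confidence threshold are hypothetical placeholders; in practice the threshold would be set, and periodically revisited, based on the AI's demonstrated reliability.

```python
from enum import Enum


class Route(Enum):
    AUTO = "ai_handles"
    HUMAN_REVIEW = "human_reviews_ai_analysis"
    HUMAN_DECIDES = "human_decides_with_ai_input"


# Hypothetical decision types, grouped as in the text above.
CONSEQUENTIAL = {"privilege_determination", "litigation_strategy", "policy_exception"}
ROUTINE = {"document_classification", "retention_application", "relevance_screening"}


def route(decision_type, confidence, auto_threshold=0.95):
    """Pick who makes the call, given decision type and model confidence."""
    if decision_type in CONSEQUENTIAL:
        # High-stakes decisions always rest with an accountable human.
        return Route.HUMAN_DECIDES
    if decision_type in ROUTINE and confidence >= auto_threshold:
        return Route.AUTO
    # Unknown types and low-confidence routine items go to collaborative review.
    return Route.HUMAN_REVIEW


if __name__ == "__main__":
    print(route("document_classification", 0.99))
    print(route("document_classification", 0.70))
    print(route("privilege_determination", 0.99))
```

Lowering `auto_threshold` over time is one way to represent the shifting boundary between "AI handles" and "human reviews" as confidence in the system grows.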

The key is intentionality. Organizations should consciously decide what role AI plays in each decision type, rather than letting that allocation happen by default.

For organizations beginning their generative AI journey in information governance, several principles improve the likelihood of success:

Start with a well-defined use case. The organizations that struggle are those that try to "implement AI" as an abstract initiative. Successful implementations target specific problems—reducing review costs for a particular matter type, automating classification for a specific content repository, improving response time for records requests.

Ensure data readiness. AI can only work with data it can access. Before focusing on AI capabilities, assess whether your underlying data architecture supports integration. If your content is scattered across siloed systems with inconsistent metadata, address that foundation first.

Involve stakeholders early. IT, legal, compliance, records management, security, and business operations all have legitimate interests in how AI is deployed. Cross-functional involvement from the outset prevents costly mid-project pivots.

Plan for governance of the AI itself. Who decides what the AI is allowed to do? How are changes managed? How is performance monitored? How are issues escalated? AI requires governance just like any other information system.

Commit to iterative improvement. Initial deployments will not be perfect. Build in mechanisms for feedback, learning, and continuous enhancement. The organizations extracting the most value from AI are those that treat deployment as the beginning of a journey rather than a destination.

Generative AI is not a future consideration for information governance—it's a present reality. Organizations that engage thoughtfully with this technology will find themselves better positioned to manage information risk, reduce compliance costs, and extract value from their data assets. Those that wait will find themselves increasingly disadvantaged relative to more agile competitors.

The path forward requires neither uncritical enthusiasm nor reflexive caution. It requires informed engagement: understanding what generative AI can and cannot do, implementing it with appropriate safeguards, and continuously adapting as both the technology and regulatory landscape evolve.

For those of us who have dedicated our careers to information governance, this is a moment of remarkable opportunity. The challenges we've wrestled with for decades—consistent classification, reliable compliance, manageable discovery costs—are finally yielding to technological solutions that actually work. The question is no longer whether these problems can be solved, but how quickly we can bring solutions to our organizations and clients.

The future of information governance is here. It's time to engage.